In this post, I will try to explain what my Ph.D. is going to be about – of course, you can just read my proposal, but I assume that’s not really accessible to a lay-person. The broad topic is knowledge management across formal libraries, specifically libraries of formal mathematics obtained from (mostly) various computer-supported theorem prover systems.

This sentence will take some unpacking to be useful to people who don’t already work in the area of mathematical knowledge management, so let’s do that now:

Sheldon: What is physics? Physics comes from the ancient Greek word physika. It’s at this point that you’ll want to start taking notes. Physika means the science of natural things. And it is there, in ancient Greece, that our story begins.

Penny: Ancient Greece?

Sheldon: Hush. If you have questions, raise your hand. It’s a warm summer evening, circa 600 BC, you’ve finished your shopping at the local market, or agora, and you look up at the night sky…

Big Bang Theory

(Yeah, no, I’m not going to be that exhaustive 😉 )

The thing is: Knowledge is publicly available in many different formats. Even if we restrict ourselves to math only, we still have textbooks, lecture notes, papers, wiki-style encyclopedias (wikipedia, planetmath, wolfram mathworld etc.), forums (stackexchange etc.),… all of which basically exist as isolated “knowledge stores” with almost no connections to the rest of the world, or other sources – even though, of course, a standard introductory textbook on algebra will e.g. contain a definition and explanation of a group, wikipedia has an article on groups, and so do planetmath and mathworld.

Just linking all of these resources on groups would already be a huge benefit for users – but this has to be done basically by hand or using simple text-based heuristics, since natural language parsing tends to be incredibly difficult for computers. By the way – one of the guys in our group (Deyan Ginev) built a piece of software called Nnexus, which is basically a huge database of mathematical concepts and online resources for them, coupled with a firefox plugin that automatically highlights mathematical phrases in some web page and links them to e.g. wikipedia, planetmath, etc.

But any knowledge becomes a lot more valuable from a machine-oriented point of view, if it is formal. Natural language is intrinsically difficult for computers to handle – but the more formally we express something, the more a computer can “know” about that thing. Let’s call the process of making-a-computer-aware-of-what-some-text-actually-means semantification. Actually, we had two undergraduate theses in our research group this year about spotting declarations in documents (“let X be a topological space, such that…“) and semantifying formulas in documents (i.e. decomposing a formula like “2+3” into something like “apply Function(+) to (NatNumber(2) and NatNumber(3))“). We even have a mathematical search engine, that tries to find mathematical expressions, while e.g. ignoring different names for variables (e.g. searching for \(a^2+b^2\) would also yield results like \( x^2+y^2 \)) – something that google and other general purpose search engines don’t do.

I’m mostly looking at purely formal knowledge, so let’s see what that means. On the purely informal side, we might have a statement like

“The square root of a prime is irrational”.

This is a natural language phrase, and correspondingly computers can’t do too much with that. But of course, mathematicians have ways to express the same thing in a slightly more formal way, namely first- (or second- or higher-)order logic. Even better, we know that we can (ignoring some minor or major philosophical and mathematical issues) express every mathematical statement in a very rigorous formal way using logic:

\[\forall p\; (\text{Prime}(p)\Rightarrow \sqrt p\notin\mathbb Q)\]

“For all p: If p is a prime, then \(\sqrt p\) is not an element of the set of rational numbers”

This is already a lot more formal, and much more useful to a computer – provided it knows, what the symbols \(\forall\), \(\text{Prime}\), \(\sqrt{\cdot}\), \(\notin\) etc. “mean” in the first place. So let’s look at a (made-up) XML-syntax, that might express the same thing in a fully formal way:

<bind variable="\(p\)"><binder><symbol>\(\forall\)</symbol></binder>\(\Rightarrow\)

<apply><function><symbol></symbol></function>\(\text{Prime}\)

<apply><function><symbol></symbol></function>\(p\)

<variable></variable>\(\notin\)

</apply>

<apply><function><symbol></symbol></function>\(\sqrt{\cdot}\)

<apply><function><symbol></symbol></function>\(p\)

<variable></variable>\(\mathbb N\)

</apply>

<symbol></symbol>

</apply></apply></bind>

Now this is something computers can actually work with – it’s basically the syntax tree of the formula serialized in a way, that the machine “knows” that e.g. \(\sqrt{\cdot}\) is applied to the variable \(p\), and so on. If the machine now also “knows” what the symbols \(\forall\) etc. mean (i.e. by defining them somewhere somehow), the computer basically “knows” everything there is to know about that statement – which allows it to do lots of cool stuff with it, like proving theorems. And in deed, theorem provers are a great source for formal knowledge for us, since they usually come with (developer and/or community curated) huge libraries of formalizations.

I guess at this point, I should explain a bit about how computer-based theorem provers work. Usually, they provide

- an abstract, internal syntax for storing mathematical concepts and their relations to other concepts, similar to the XML-like example above,

- a (more or less simple) concrete syntax that users actually use, that allows for defining new concepts and theorems on the basis of previous definitions, like

“forall p: (Prime(p) => not (Rational(sqrt(p))))“,

and - a language to specify proofs for theorems; either in a similar-to-natural-language way, like

“assume x, then y“

or as a sequence of instructions for the system consisting of proof tactics or inference rules to use, like

“\(\wedge\)-Elimination-Left; blast; ...“.

The system then checks the definitions for well-formedness, potentially generates proof obligations for them (to ensure well-definedness), executes the proof steps and checks, that they prove the desired statement.

Here is a nice comparison of how the the above statement and the associated definitions and proofs look like in several different theorem provers – it’s quite enlightening (even if you just briefly skim over it, which I recommend you do), especially the paragraphs “What is special about the system compared to other systems?” in each section. And it shows some of the reasons for the main problem I work on:

All of these theorem provers are completely mutually incompatible!

So let me first explain, why that is the case and then, why this is an awful state of affairs.

Why incompatible?

Let’s get the obvious things out of the way first: Different theorem provers use

- different syntax (they usually come with their own language after all),

- different user interfaces (if you’re especially unlucky, they might force you to use emacs! I like the joke “Emacs wouldn’t be a bad operating system, if only it came with a decent editor!”),

- different module systems (i.e. ways to structure knowledge in e.g. theories, classes, collections, libraries etc.),

- different ways to store and manage the libraries (file formats etc).

All of these things are basically “surface issues”, and on their own they’re not too prohibitive if you want to have different systems communicate and share content with each other. But there are deeper issues hidden beneath the surface, when we look at how these theorem provers actually work on a conceptual level. When you design a new theorem prover system, there’s always a trade-off between two desirable features: On the one hand, you want the language of the system to be as expressive as possible, because you want users to be able to conveniently specify rather complicated mathematical structures (second-countable topological spaces, field extensions and their associated Galois groups,…), which means your language and system should be rather complex. On the other hand – the whole point of theorem provers is that you want to be able to trust their results, which means that the core system should be as simple as possible, so you can verify for yourself that it’s not doing anything questionable in the process of verifying a proof or proving something automatically.

For that reason, theorem provers usually fix a rather simple logical foundation (some variant of set theory, type theory, lambda calculus,…): Mizar uses Tarski-Grothendieck set theory, Coq uses the calculus of constructions, Isabelle uses intuitionistic type theory (which is then extended by e.g. higher-order logic or ZFC set theory), HOL4 and HOLLight use higher-order logic,… On top of that, you then allow for more or less intricate definition principles to add new mathematical concepts to the system – but with the restriction that new definitions can always be expanded to expressions using only the symbols provided directly by the foundation. You never add any new “truths” to the system – you only ever add definitions and check whether the theorems that use them (once expanded) are already true on the basis of the foundation (we call that homogeneous reasoning).

That adds another level of incompatibility, namely that the foundations don’t even agree on their fundamental ontologies and use quite different construction methods to build even just the basic domains of discourse used in mathematics (like the natural numbers, real numbers, algebraic structures like rings or fields,…). Even worse: you constantly have to remind yourself how e.g. the natural numbers are defined in your foundational logic basically every time you use them – the whole system is built on top of the foundation, after all.

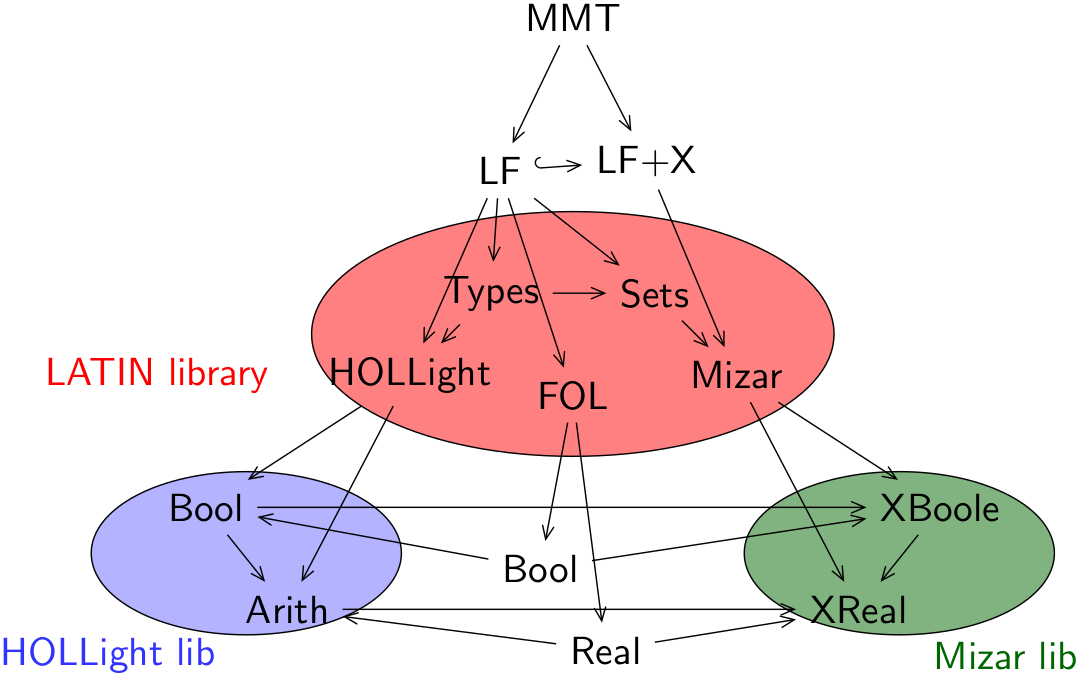

There are quite good reasons for that – some of them being that it just works, it’s rather simple to implement in the first place and it allows for quite powerful proving and verification techniques. On the other hand – that’s just not how mathematicians work! In practice, “the” real numbers are “the” real numbers. The foundation and whether the reals were constructed using Cauchy sequences or Dedekind cuts or whatever simply doesn’t matter in most situations (we call that heterogeneous reasoning). And there are only limited translations between these different foundations available, most of them rather ad-hoc. All of these aspects make it quite difficult to in any way relate concepts in one theorem prover to the (from a mathematical point of view) same concept in a different theorem prover.

If you know a bit about programming, take programming language paradigms as an analogy. Pretty much every language has e.g. some internal method to sort a list of numbers, all of which might even use the same abstract algorithm (let’s say quicksort). It’s difficult enough to translate such a method from e.g. C++ to Java, which are both object-oriented languages, but if you have an imperative language, a functional one and an object-oriented one, translating content (automatically) between those is a nightmare.

Why is that a problem?

Well, I’m not sure if that needs spelling out, but just imagine you’re a mathematician, who proved some new theorem and now wants to formalize that in System A. You might have used an intricate lemma in your proof, that the guy down the hall already proved and maybe even verified in System B. Now you can’t just reuse your buddy’s formalization, because he used B and you don’t know B. Even worse, B only works with emacs as a user interface and emacs is a nightmare to work with, because you’re used to jEdit, which is used by A. So you have to redo the verification of his lemma in A and grudgingly sift through his formalization only to realize, that your buddy used some rather advanced language features (let’s say some intricate reflection principles) which A doesn’t offer – so you throw away his formalization and start from scratch. Of course you could just learn B and use that, but then again – maybe A has some other feature that would be really convenient for your proof, so now you’re screwed either way.

In general, a huge proportion of the stuff that any given theorem prover system already has in its library needs to be redone basically from scratch in any other system. So if you came up with a new really neat theorem prover system – too bad nobody will use it unless you can provide the basics (natural numbers, real numbers, topological spaces,…) first, which takes several person-years to write, and you can’t just import the libraries from other systems because of incompatibility issues. Hooray.

That’s the problem I’m working on, but this post already has almost 2000 words, so I will explain how I want to (at least partially) make that problem less problematic in another post.